
The Economist is my favorite newspaper. It’s also one of the few profitable news publishers. Why is it successful while the rest of the industry is gasping for air? It’s not because they’re extremely innovative in how they charge for or deliver content. They don’t have a splashy online presence, although they have slowly waded into crafting online-only content that takes advantage of the web’s interactivity. They don’t have a fancy iPhone or iPad app yet. The simple reason for their success is the content itself.

There is no close substitute for The Economist’s product, so people are willing to pay for it. They cover business and political news with unequaled breadth and depth, and because they are a weekly, they can chew a bit more on their stories before publishing. Most importantly, they are transparent about their views (socially liberal, fiscally conservative). They take a side on every issue but deliver arguments in an even-handed way, enabling the reader to agree or disagree based on the facts and data presented. They don’t even publish bylines, making it all about what is written and not who has written it. Since I like their style, and would love to read more news written this way, I thought I’d briefly deconstruct a typical article to see how their editors tick.

Each article’s headline is either brief and opaque (e.g. “Race to the bottom” or “An empire built on sand”) or a non sequitur (e.g. “No, these are special puppies” or “Cue the fish”). Sometimes the headlines read like the Old Spice guy wrote them (“I’m on a horse”), but that’s part of dressing up what could otherwise be dull subject matter. The non sequiturs force you to read on just so you can understand what they’re talking about.

The subheader is all business. There’s no fat, and it reads like a well-crafted tweet. For an article on genetic testing, one reads, “The personal genetic-testing industry is under fire, but happier days lie ahead”. The subheader is usually one or two sentences and plainly states the paper’s view on the issue (“Google has joined Verizon in lobbying to erode net neutrality”). I really like this method because at bottom all journalists have an opinion, so why not be transparent and lay it bare? Laying out the conclusion in a couple of sentences upfront also allows the reader to get something even if she’s just paging through.

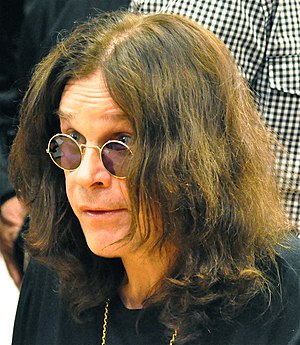

Often, the lede introduces the article with a story or quote. The article about personal genetic testing starts with a quote from Ozzy Osbourne: “By all accounts, I’m a medical miracle”. It gets the reader interested and humanizes what would otherwise be a purely technical subject.

The next paragraph or two include a strong argument in support of the subheader. In the genetic testing article, the author writes about how Osbourne “is not alone in wondering what mysteries genetic testing might unlock”. It goes on to say that Google is invested in a genetic testing company and that Warren Buffett himself has been tested. It discusses how people could come to understand their risk of getting a disease and how that information holds great promise for treatment. At this point, the reader may be wondering how anyone could oppose genetic testing.

Not so fast. In typical Economist fashion, the next paragraph eviscerates the thesis of the article. While the subheader supported genetic testing, the next paragraph cites a damning report from a credible source that found many test results were “misleading and of little or no practical use to consumers”. Game over. At this point, the reader is scratching her head thinking, “I thought testing was supposed to be a good thing?!” By so willingly presenting a counterargument, The Economist strengthens the credibility of the view it supports in the subheader. It’s an effective writing tactic, and their use of it has made me a more critical reader, forcing me to always search for the other side of the story.

The bulk of the article then presents a series of facts from either side, filtering each one through the views presented in the subheader. The facts are laid out, but placed in context. The reader can decide which facts are convincing and which might be discarded.

The final paragraph is almost never definitive. It summarizes the main arguments and concludes with an open question of whether or not The Economist’s view will come to pass. It’s not that they waffle; they’re just practical. And they don’t play the prediction games that all the cable news talking heads play. I think it’s a refreshing approach that lets readers decide on the future for themselves.
